The Journey to a Front-end Monorepo: What I learned

Hey! I’m Berkay Aydin, engineering director and front-end architect at Jotform. In this article, I’ll share what I learned while restructuring our front-end architecture.

At Jotform, we have agile cross-functional teams, and we’re following the trunk-based development approach, which means we are directly pushing updates to our main branch. Our main branch is always production-ready and guarded by automated tests. Our production environment gets updated almost 300 times on a regular day.

We used to have a polyrepo structure, with a separate repo for every application and a few more for shared libraries. Every repo had its own configuration.

This structure was good for managing repos separately, as long as each repo had an owner. Our company was growing fast, and we didn’t manage repo ownership well. As more developers joined the team, things started to break.

The first problems we faced were:

- Inconsistent Tooling: We had multiple linter, webpack, testing, and GitHub action configurations. We did similar things in different repos and had multiple build pipelines.

- Difference in development environment: Every team managed the development dependencies of their project with their own efforts.

- Hard-to-share code: We aimed to share code across multiple teams and projects, so we created common components and utilities inside another shared repository. Usually, we linked modules from webpack configs during development and published the package to a private registry to use in another application. The process was really slow and painful, and it resulted in a lot of code duplication.

- Unable to Manage Versions: We were creating private npm packages for code sharing. Once a shared library got an update, we had to update every application individually. The number of dependent applications was growing very fast, and we were updating 40-plus repositories for a single package update. Unfortunately, we often forgot to update, or chose not to update, some applications, so our users saw a different UI for the same component in different applications. This was effectively breaking our trunk-based development approach. If we forgot a rarely used app, it would keep running old packages for weeks.

- Hard-to-see affected applications: Once a developer made an update to a component library, it was hard to see all affected applications company-wide, which usually resulted in breaking features.

- Hard mode onboarding: Newcomers were struggling to find related components in these multiple codebases.

With these pain points in mind, we started looking for a recipe.

The recipe is Monorepo

We started our journey with Yarn Workspaces, which offered good development speed. We combined it with Lerna in a fresh repository.

As a first step, we created our core config packages (ESLint, TypeScript, Webpack, etc.). A few weeks later, we switched to Turborepo.

Then we started a long journey — moving all our packages to our monorepo took exactly a year. We started at the beginning of 2022 and finished at the end of 2022.

Our monorepo structure and tooling evolved a lot during the year. We dropped almost all of our initial choices because of performance concerns and what we learned along the way.

Here is a summary of our latest tooling:

- PNPM as the package manager. It is super fast and efficient. It also has great workspace management tooling. You can always consume the latest local version of your packages by using the workspace protocol in package.json.

- NX as the monorepo orchestrator. NX has been great so far. It offers the following key features:

Computational Remote Caching: If a commit doesn’t affect a library, NX reuses the previous build of that library, which speeds up your build times. We have almost 250 packages in our monorepo, and we build only the packages affected by a commit, in seconds.

Dependency Graph: This is very helpful for developers, allowing them to see which packages will be affected by their updates.

- Syncpack helps us use the same dependency versions across all applications and libraries. This is very helpful for reducing bundle sizes and improving the developer experience.

- Danger JS helps us request reviews for critical updates, for example when an update affects multiple packages, when someone creates a new package, or when someone adds a new dependency.

- Renovate Bot helps us keep our dependencies up-to-date and secure.

- Internal CLI: We created an internal CLI to let developers easily create new applications or libraries.
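
To make the "affected packages" idea concrete, here is a minimal TypeScript sketch of how a dependency graph can answer the question "what does my change touch?". It is only an illustration of the concept, not NX's implementation; the package names and the graph shape are hypothetical.

```typescript
// Hypothetical graph: each package maps to the workspace packages it depends on.
type DependencyGraph = Record<string, string[]>;

// Returns the changed packages plus everything that depends on them,
// directly or transitively.
function findAffected(graph: DependencyGraph, changed: string[]): string[] {
  // Invert the graph so we can look up "who depends on this package?".
  const dependents = new Map<string, string[]>();
  for (const pkg of Object.keys(graph)) {
    for (const dep of graph[pkg]) {
      const list = dependents.get(dep) ?? [];
      list.push(pkg);
      dependents.set(dep, list);
    }
  }

  // Breadth-first walk from the changed packages through their dependents.
  const affected = new Set<string>(changed);
  const queue = changed.slice();
  while (queue.length > 0) {
    const current = queue.shift() as string;
    for (const dependent of dependents.get(current) ?? []) {
      if (!affected.has(dependent)) {
        affected.add(dependent);
        queue.push(dependent);
      }
    }
  }
  return Array.from(affected).sort();
}

// Made-up example workspace.
const exampleGraph: DependencyGraph = {
  "ui-button": [],
  "ui-form": ["ui-button"],
  "app-builder": ["ui-form"],
  "app-inbox": ["ui-button"],
  "app-docs": [],
};

// A change to ui-button reaches every package except app-docs.
console.log(findAffected(exampleGraph, ["ui-button"]));
```

Only the packages returned here would need to be rebuilt and retested; everything else can be skipped or served from cache.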

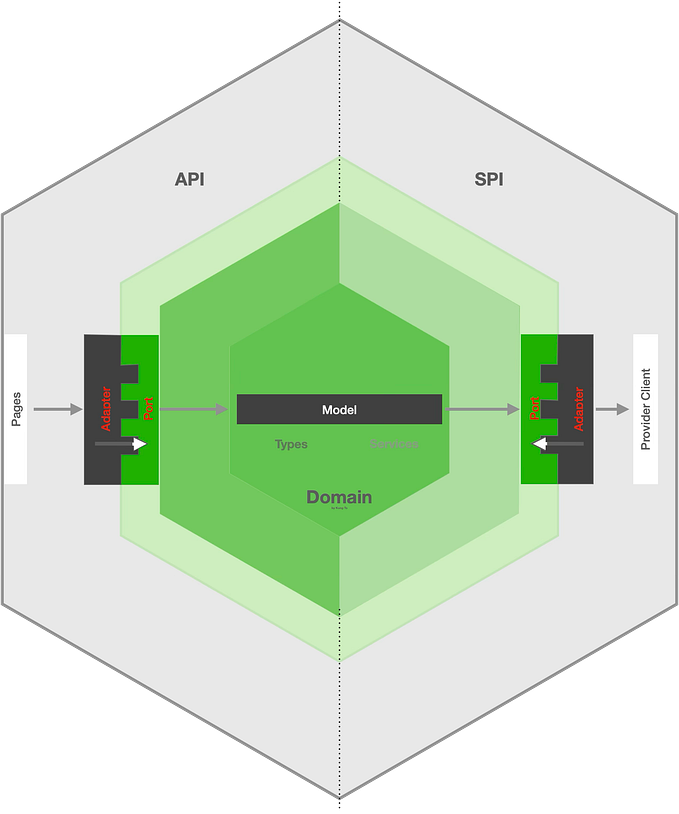

Currently, we have almost 250 packages, and we placed our packages in a similar structure described in the following image

So, what did I learn during this process? Here are some of the pros and cons:

Pros

A monorepo can speed up your development and improve the developer experience if it is well-structured and, most importantly, powered by good tooling.

- Great for standardization: We don’t have multiple configs or build processes anymore. Developers use the same code and commit standards company-wide. The monorepo approach reduced our technical debt. We’re sure every application is using the same configuration. For example, if we update our browser support, we know every part of the company gets the same browser support.

- Code sharing in seconds: It is very easy to create new shared libraries and share code using the pnpm workspaces feature and some internal boilerplate.

- Common development environment: It is easy to create common commands and standardize the development environment to a single command.

- Better onboarding: Newcomers can adapt easily with a single, well-structured codebase.

- Easier refactoring: A single commit can change multiple projects at once, so you don’t need to wait for a PR to be merged in another repository.

- Reduced total build times: Orchestrator tools like NX help you to reduce your build times using computational caching algorithms. It builds and caches build outputs both locally and remotely. Your total build time decreases, especially for shared libraries.

- Dependency Awareness: You can effortlessly see how your application and libraries are connected to each other. Developers are aware of which applications or libraries will be affected by their commits. We’re also sharing this information via a custom GitHub Action for improving awareness.
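
The caching idea behind the reduced build times can be sketched in a few lines of TypeScript. This is a simplified illustration under my own assumptions, not how NX computes its hashes: a package's cache key is derived from its own files plus the keys of its dependencies, so a build can be reused whenever the key is unchanged. The hash function, package names, and file contents are hypothetical, and the sketch assumes the dependency graph has no cycles.

```typescript
interface PackageInputs {
  sourceFiles: Record<string, string>; // file path -> file contents
  dependencies: string[]; // workspace packages this package depends on
}
type Workspace = Record<string, PackageInputs>;

// Tiny rolling hash to keep the sketch dependency-free.
// A real tool would use a cryptographic hash instead.
function simpleHash(text: string): string {
  let hash = 7;
  for (let i = 0; i < text.length; i++) {
    hash = (hash * 31 + text.charCodeAt(i)) >>> 0;
  }
  return hash.toString(16);
}

// Cache key = hash of the package's own files + the cache keys of its dependencies.
function computeCacheKey(
  name: string,
  workspace: Workspace,
  memo: Map<string, string> = new Map()
): string {
  const known = memo.get(name);
  if (known !== undefined) return known;

  const pkg = workspace[name];
  const fileParts = Object.keys(pkg.sourceFiles)
    .sort()
    .map((path) => `${path}:${pkg.sourceFiles[path]}`);
  const depParts = pkg.dependencies
    .slice()
    .sort()
    .map((dep) => `${dep}@${computeCacheKey(dep, workspace, memo)}`);

  const key = simpleHash(fileParts.concat(depParts).join("\n"));
  memo.set(name, key);
  return key;
}

// Made-up workspace before and after a commit that only touches ui-button.
const before: Workspace = {
  "ui-button": { sourceFiles: { "index.ts": "export const label = 'v1';" }, dependencies: [] },
  "app-forms": { sourceFiles: { "main.ts": "import 'ui-button';" }, dependencies: ["ui-button"] },
  "app-docs": { sourceFiles: { "main.ts": "export {};" }, dependencies: [] },
};
const after: Workspace = {
  ...before,
  "ui-button": { sourceFiles: { "index.ts": "export const label = 'v2';" }, dependencies: [] },
};

// A package needs a rebuild only when its cache key changed.
function needsRebuild(name: string): boolean {
  return computeCacheKey(name, before) !== computeCacheKey(name, after);
}

for (const name of Object.keys(after)) {
  console.log(name, needsRebuild(name) ? "rebuild" : "reuse cached build");
}
```

Because dependency keys feed into the key of every dependent, a change in a shared library invalidates its consumers, while unrelated packages keep their cached output. The same property explains why an unexpected input to the hash can invalidate caches that should have stayed valid.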

Cons

Monorepos also have some drawbacks. It is important to keep your monorepo from becoming a monolith.

- Hard to migrate existing repos: One of the hardest parts is importing existing repos into a new monorepo. The existing repo needs to meet your new monorepo’s requirements. Before the import phase, you should update all configs and dependencies to monorepo-compatible versions, which is a pain and sometimes takes weeks.

A tip: You can import an existing repo and preserve its commit history (if that is important to you) using the Lerna import tool.

- Working times: It is harder to make critical changes when you are changing a live system or when the source repo is under active development. You will probably need to do most of your work on weekends or other off-hours so you don’t interrupt business operations :)

- Managing build queue: Our main branch gets 300 commits on a regular day. We’re following trunk-based development, but if we allowed all developers to push directly to the main branch of the monorepo, they would race to push their commits, creating huge build queues. If someone breaks the trunk, development stops. We have to use short-lived feature branches to keep our trunk-based development working.

Basically, we run the build and test phases on pull requests and use the main CI job only to sync to the servers. We need this in order to scale the system.

We’re using GitHub’s auto-merge option and a custom-built solution to scale project builds. With this approach, a failing PR doesn’t break the trunk; the main job stays green.

- Hard-to-debug caching: We aim for our build times to be under two minutes. We had some issues with NX computational caching — caches were becoming invalid even if the related module didn’t get an update. This resulted in increased build times, and we had difficulty finding the root cause.

- Less freedom: People like new technologies, and it is easier to try new things in a standalone repository. If you want your monorepo to keep up with its standards, it becomes harder to adopt new things. For example, we wanted to use the same dependency versions across our codebase. If someone wanted to upgrade a package (e.g. React), they needed to update the whole codebase. Of course, you can allow different versions in a monorepo, but it negates the purpose of a monorepo approach.
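
The single-version rule mentioned above is something a tool like Syncpack enforces for us. As a rough illustration of the underlying check (my own sketch, not Syncpack's implementation or API), the following TypeScript groups every declared dependency by version range and reports the ones declared with more than one range. The manifests and package names are hypothetical.

```typescript
interface PackageManifest {
  name: string;
  dependencies: Record<string, string>; // dependency name -> version range
}

interface VersionMismatch {
  dependency: string;
  usedBy: Record<string, string[]>; // version range -> packages that declare it
}

// Reports every dependency that is declared with more than one version range.
function findVersionMismatches(manifests: PackageManifest[]): VersionMismatch[] {
  const usage: Record<string, Record<string, string[]>> = {};

  for (const manifest of manifests) {
    for (const dependency of Object.keys(manifest.dependencies)) {
      const range = manifest.dependencies[dependency];
      const byRange = (usage[dependency] = usage[dependency] ?? {});
      (byRange[range] = byRange[range] ?? []).push(manifest.name);
    }
  }

  return Object.keys(usage)
    .sort()
    .filter((dependency) => Object.keys(usage[dependency]).length > 1)
    .map((dependency) => ({ dependency, usedBy: usage[dependency] }));
}

// Made-up manifests: app-inbox is still on an older React range.
const manifests: PackageManifest[] = [
  { name: "app-builder", dependencies: { react: "^18.2.0", lodash: "^4.17.21" } },
  { name: "app-inbox", dependencies: { react: "^17.0.2", lodash: "^4.17.21" } },
  { name: "ui-button", dependencies: { react: "^18.2.0" } },
];

for (const mismatch of findVersionMismatches(manifests)) {
  console.log(mismatch.dependency, JSON.stringify(mismatch.usedBy));
}
```

A check like this can run in CI and fail the pull request, which is exactly the trade-off described above: upgrading one package means upgrading every consumer in the same change.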

Conclusion

Our frontend monorepo approach improved our overall developer experience, but you should know the pros and cons before deciding to go this route.

I believe some steps in this article, like orchestration and build tooling, deserve further explanation. I look forward to writing in-depth articles on these topics soon!

Feel free to send a message; find me on twitter/sbayd or LinkedIn.

Originally published at BetterProgramming.